Home Value Predictions

Three teammates and I completed the presentation below as our final project for Statistics 4620, Introduction to Statistical Learning, at Ohio State. This advanced class covered a variety of statistical learning models and concepts, which we applied in the following project on the Ames, Iowa housing dataset.

The goal of this project was to use the Ames dataset to build a statistical model that predicts the selling price of a home. The dataset contains many home attributes, ranging from square footage to number of rooms to lawn and garage type. First, our group discussed our initial theories and expectations about housing data and home value prediction, which informed our first round of variable elimination. Next, we inspected each attribute individually, eliminating those with limited variety across categories, large amounts of missing data, or significant collinearity with other variables. We also hand-coded several variable transformations, especially for categorical variables with uneven distributions across categories.
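
As a rough illustration of the screening rules described above, here is a minimal Python sketch (the project itself was written in R, so this is not our actual code). The column names, toy values, and thresholds are all hypothetical and chosen only to make the three checks concrete: share of missing values, dominance of a single category, and correlation between two numeric attributes.

```python
from collections import Counter


def missing_fraction(values):
    """Share of entries that are missing (represented here as None)."""
    return sum(v is None for v in values) / len(values)


def dominant_share(values):
    """Share of non-missing entries taken up by the most common value."""
    present = [v for v in values if v is not None]
    if not present:
        return 1.0
    return Counter(present).most_common(1)[0][1] / len(present)


def pearson(x, y):
    """Pearson correlation between two equal-length numeric lists."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    vx = sum((a - mx) ** 2 for a in x)
    vy = sum((b - my) ** 2 for b in y)
    return cov / (vx * vy) ** 0.5


def screen_columns(table, max_missing=0.5, max_dominant=0.95):
    """Return the names of columns that pass both screening rules.

    The default thresholds are illustrative assumptions only.
    """
    kept = []
    for name, values in table.items():
        if missing_fraction(values) > max_missing:
            continue  # too much missing data
        if dominant_share(values) > max_dominant:
            continue  # almost no variety across categories
        kept.append(name)
    return kept


# Toy data with hypothetical column names and values.
toy = {
    "living_area": [1500, 1800, 1200, 2100, 1650],
    "pool_quality": [None, None, None, "Good", None],
    "street_type": ["Paved", "Paved", "Paved", "Paved", "Paved"],
    "garage_type": ["Attached", "Detached", "Attached", None, "Attached"],
}
kept_columns = screen_columns(toy)

# A high correlation between two numeric attributes flags possible collinearity.
total_rooms = [6, 7, 5, 9, 7]  # hypothetical second attribute
area_room_corr = pearson(toy["living_area"], total_rooms)
```

In this toy table, `pool_quality` is dropped for missing data and `street_type` for lack of variety, while `living_area` and `garage_type` are kept. A pair of attributes with a correlation close to 1 or -1 would be a candidate for dropping one of the two.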

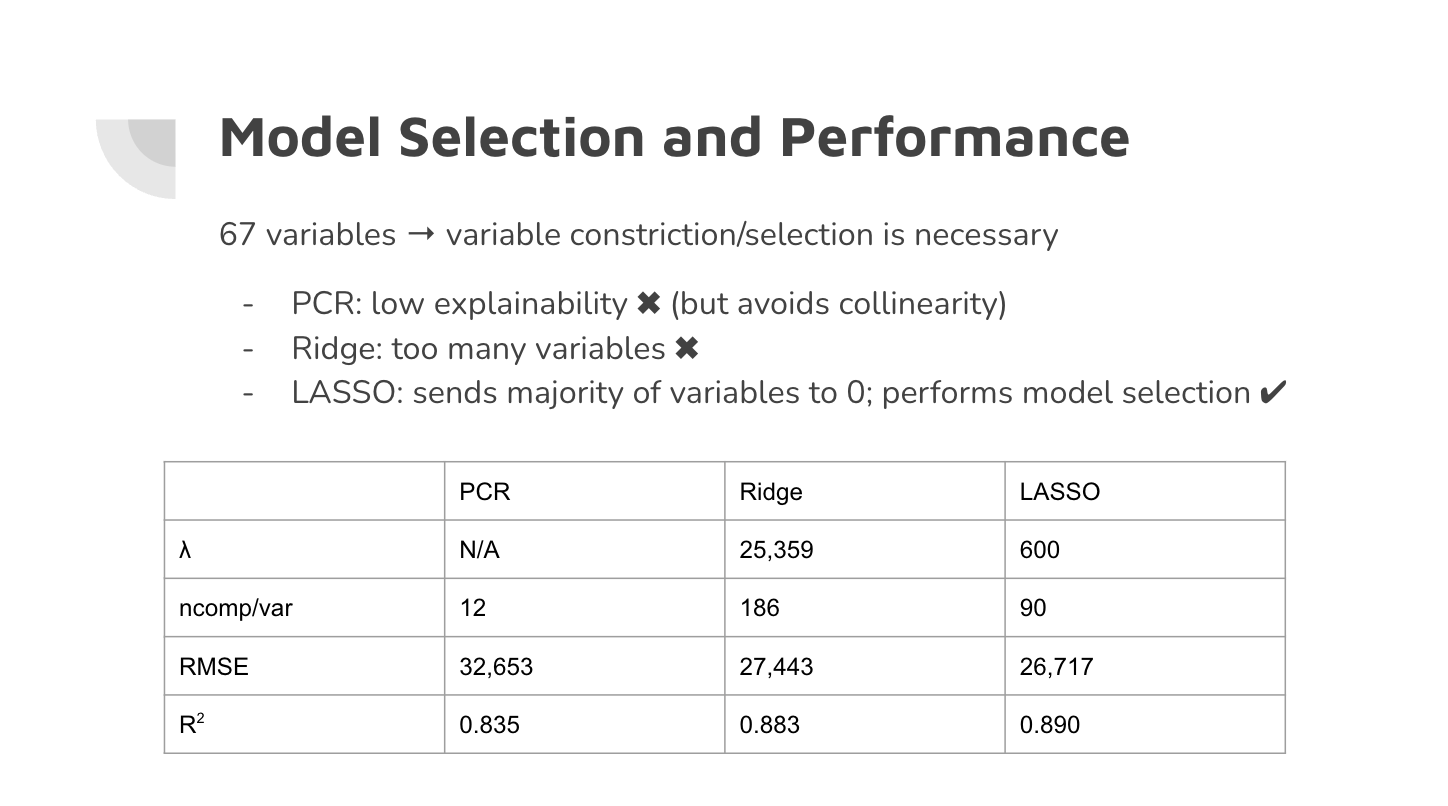

After completing our exploratory analyses and variable transformations, we moved on to building our model. Because we had a large number of variables, we knew we needed a statistical model that could perform some form of variable selection or constrain the coefficients. We considered three models: Principal Components Regression, Ridge Regression, and LASSO Regression. We settled on LASSO Regression after assessing predictive performance and weighing other model qualities, such as the difficulty of interpreting results from Principal Components Regression. This project was a great experience in developing our ability, as a team, to produce a robust and informative statistical model from raw, uncleaned data.
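
To make the difference between LASSO and Ridge concrete, here is a small Python sketch of a textbook special case. It is not our project code (which was in R), and the coefficient values and penalty are made up. It assumes the predictors are orthonormal, LASSO minimizes `0.5 * ||y - Xb||^2 + lam * ||b||_1`, and Ridge minimizes `0.5 * ||y - Xb||^2 + 0.5 * lam * ||b||^2`. Under those assumptions each penalized coefficient has a closed form in terms of the ordinary least squares coefficient.

```python
def soft_threshold(b, lam):
    """LASSO coefficient under an orthonormal design: shrink toward zero by lam,
    and set to exactly zero if the least squares coefficient is within lam of zero."""
    if b > lam:
        return b - lam
    if b < -lam:
        return b + lam
    return 0.0


def ridge_shrink(b, lam):
    """Ridge coefficient under an orthonormal design: scale by 1 / (1 + lam)."""
    return b / (1.0 + lam)


# Hypothetical least squares coefficients and penalty strength.
ols_coefficients = [3.0, -0.4, 0.1, -2.5]
lam = 0.5

lasso_coefficients = [soft_threshold(b, lam) for b in ols_coefficients]
ridge_coefficients = [ridge_shrink(b, lam) for b in ols_coefficients]
```

In this sketch LASSO sets the two small coefficients to exactly zero, which removes those variables from the model, while Ridge shrinks every coefficient but keeps all of them nonzero. That selection behavior is what makes LASSO attractive when there are many candidate predictors.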

To see the finalized R code for this project, click here.